Local Research Tool Agent with Ollama, Memory, and External Utilities

Tool-enabled research agent that combines local language model reasoning with calculators, search APIs, and custom tools for grounded analysis.

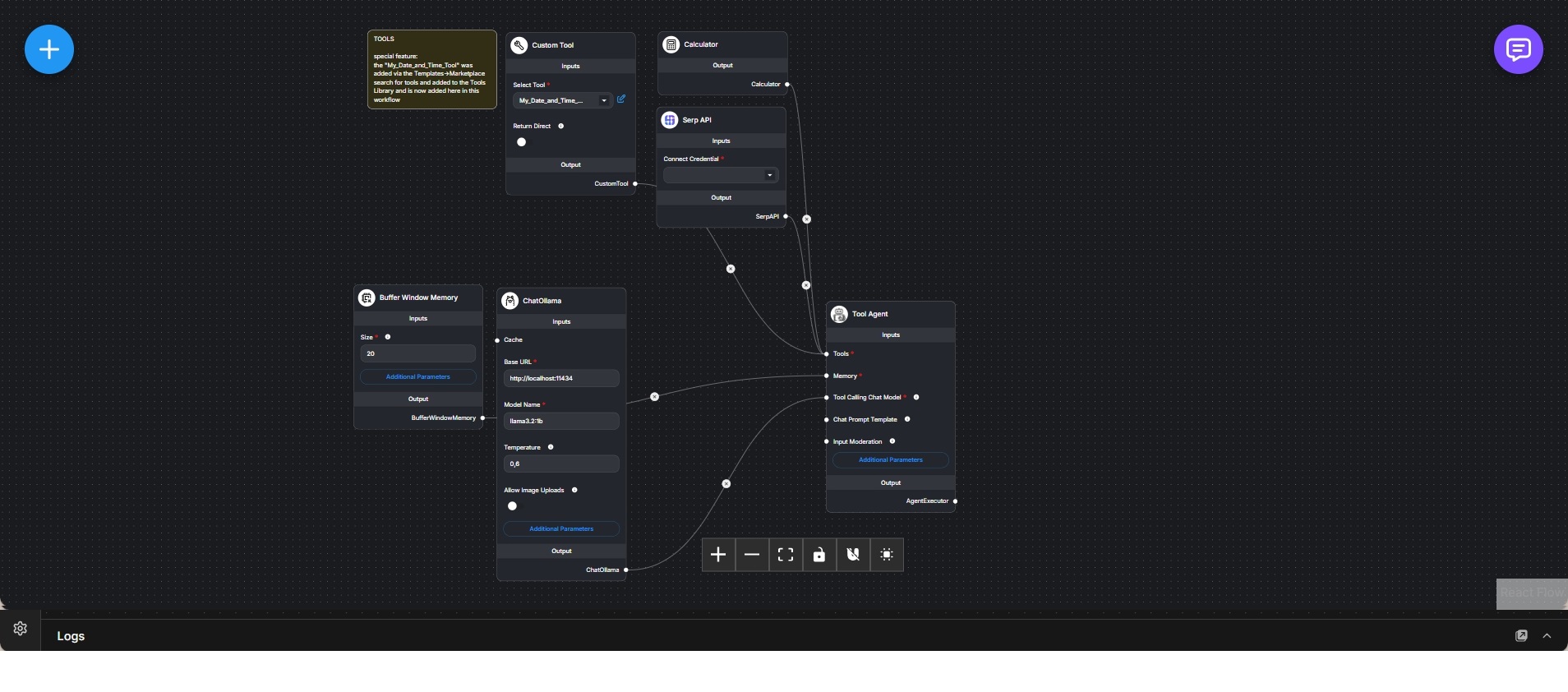

This workflow implements a tool-augmented research agent designed to perform analytical and information-gathering tasks by combining local language model reasoning with deterministic utilities and external data sources.

A locally hosted Ollama chat model acts as the agent’s core reasoning engine. It runs at a moderate temperature, which keeps responses reasonably focused and consistent while leaving room for flexible reasoning. The model runs entirely locally, with no external model provider involved.
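To make the temperature setting concrete, here is a minimal sketch of an equivalent direct call to Ollama's `/api/chat` endpoint, bypassing the workflow's chat model node. It assumes a default Ollama install listening on `localhost:11434`; the model name `llama3.1` and the temperature value `0.5` are illustrative assumptions, since the workflow only specifies a "moderate" temperature. The helper names `build_chat_request` and `send_chat` are hypothetical.

```python
import json
import urllib.request

# Default local Ollama endpoint (assumption: standard install on port 11434).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, messages: list[dict], temperature: float = 0.5) -> dict:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": messages,
        "stream": False,
        # Sampling parameters go under "options"; lower temperature = less random output.
        "options": {"temperature": temperature},
    }


def send_chat(payload: dict) -> str:
    """POST the payload to the local Ollama server and return the assistant's reply text."""
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]


payload = build_chat_request(
    "llama3.1",  # hypothetical model name: use any model you have pulled locally
    [{"role": "user", "content": "List three drivers of inflation."}],
)
print(json.dumps(payload, indent=2))
# send_chat(payload) only works with a running Ollama server.
```

Lowering `temperature` toward 0 makes answers more repeatable, which can help for analytical tasks; raising it gives more varied phrasing and exploration.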

A buffer window memory component provides short-term conversational context, allowing the agent to track recent user queries, intermediate results, and tool outputs within a session. This enables coherent multi-step reasoning without persistent storage.
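The idea behind a buffer window memory can be sketched in a few lines: keep only the last `k` exchanges and replay them as chat messages on the next model call. This is an illustrative stand-in, not the workflow's memory node; the class name `WindowMemory` and the window size are assumptions.

```python
from collections import deque


class WindowMemory:
    """Keep only the last `k` user/assistant exchanges (a sliding window)."""

    def __init__(self, k: int = 5):
        # Each entry is one (user, assistant) exchange; deque drops the oldest automatically.
        self.turns = deque(maxlen=k)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_messages(self) -> list[dict]:
        # Flatten the window into chat-style messages for the next model call.
        messages = []
        for user, assistant in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
        return messages


memory = WindowMemory(k=2)
memory.save("What is 2 + 2?", "4")
memory.save("Times 10?", "40")
memory.save("Minus 5?", "35")
print(len(memory.as_messages()))  # → 4: the first exchange has been evicted
```

Because nothing is written to disk and old turns are evicted, context stays bounded in size and disappears when the session ends.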

The agent is configured as a Tool Agent, giving it access to a curated set of tools. These include a calculator for deterministic arithmetic, a SERP API for real-time web search, and a custom tool that exposes additional logic such as date and time handling. Each tool is explicitly registered and made available for model-driven invocation.
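A tool set like this one can be sketched as a registry that maps a tool name to a description (what the model reads) and a function (what actually runs). In the sketch below, the calculator parses arithmetic with Python's `ast` module instead of calling `eval()`, the date/time function stands in for the custom tool, and `web_search` is a hypothetical placeholder for the SERP API call. All names are assumptions for illustration.

```python
import ast
import operator
from datetime import datetime, timezone

# Whitelisted arithmetic operators for the calculator tool.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg,
}


def calculator(expression: str) -> str:
    """Evaluate a plain arithmetic expression deterministically, without eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        # Anything else (names, calls, attributes) is rejected.
        raise ValueError(f"unsupported expression: {expression!r}")
    return str(_eval(ast.parse(expression, mode="eval").body))


def current_datetime(_: str = "") -> str:
    """Custom tool: return the current UTC time in ISO 8601 format."""
    return datetime.now(timezone.utc).isoformat()


def web_search(query: str) -> str:
    """Hypothetical placeholder; a real version would call the SERP API provider."""
    return f"[search results for {query!r} would appear here]"


# Registry exposed to the model: name -> (description, function).
TOOLS = {
    "calculator": ("Evaluate arithmetic expressions.", calculator),
    "web_search": ("Search the web for current information.", web_search),
    "current_datetime": ("Get the current date and time (UTC).", current_datetime),
}

print(calculator("(12.5 * 4) - 3"))  # → 47.0
```

The descriptions matter as much as the functions: they are what the model uses to decide which tool fits a request.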

During execution, the agent decides when to call tools based on the user’s request and the intermediate reasoning state. Tool outputs are fed back into the agent’s context, allowing subsequent reasoning steps to build on verified data rather than model assumptions.
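The decide, call, feed-back cycle can be sketched as a small loop. This is a simplified illustration, not how the workflow's Tool Agent is implemented internally: the model is replaced by a hypothetical `scripted_model` that requests one calculator call and then answers from its output, and `run_agent`, `multiply`, and the message format are assumptions.

```python
from typing import Callable

# One model step returns either {"tool": name, "input": text} or {"answer": text}.
ModelFn = Callable[[list[dict]], dict]


def run_agent(question: str, model: ModelFn,
              tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Minimal tool-calling loop: ask the model, run any requested tool, feed the result back."""
    context = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = model(context)
        if "answer" in decision:
            return decision["answer"]
        # Run the requested tool and append its output so the next step can build on it.
        result = tools[decision["tool"]](decision["input"])
        context.append({"role": "tool", "name": decision["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(context: list[dict]) -> dict:
    """Stand-in for the LLM: request one calculation, then answer from the tool output."""
    tool_outputs = [m["content"] for m in context if m["role"] == "tool"]
    if not tool_outputs:
        return {"tool": "calculator", "input": "1500 * 0.25"}
    return {"answer": f"25% of 1500 is {tool_outputs[-1]}."}


def multiply(expr: str) -> str:
    """Toy calculator that only handles 'a * b'."""
    left, right = expr.split("*")
    return str(float(left) * float(right))


result = run_agent("What is 25% of 1500?", scripted_model, {"calculator": multiply})
print(result)  # → 25% of 1500 is 375.0.
```

The `max_steps` cap guards against a model that keeps requesting tools without ever producing a final answer.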

This workflow demonstrates a key agent design principle: using language models for orchestration and decision-making, while delegating precise computation and real-world data retrieval to specialized tools. The result is a research assistant capable of grounded answers, reproducible calculations, and up-to-date information retrieval.

It is well suited for research tasks, analytical assistants, exploratory workflows, and scenarios where correctness and external validation are more important than purely generative responses.