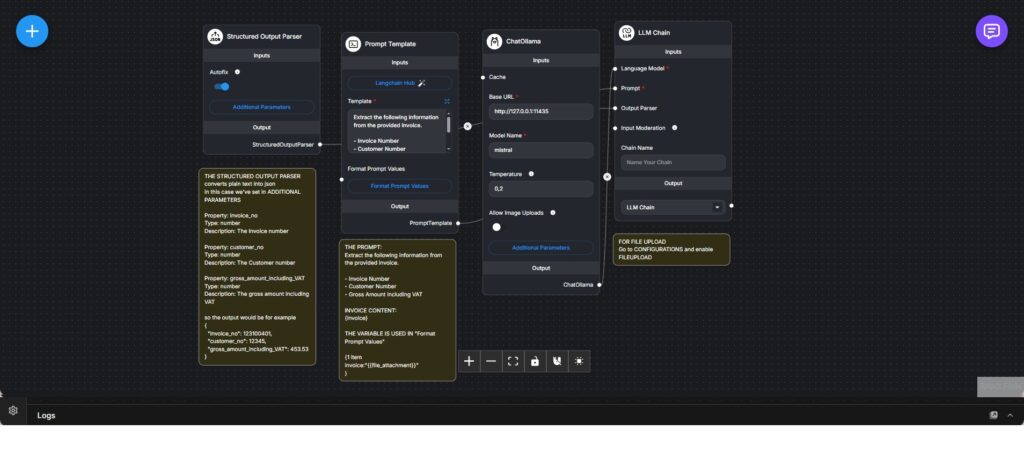

Local Invoice Analysis Workflow with Ollama and Structured Output

Self-hosted document analysis workflow that extracts structured invoice data using a local Ollama language model and schema-based output parsing.

This workflow implements a fully local invoice analysis pipeline that extracts structured data from invoice documents without relying on external cloud-based models. It prioritizes privacy, repeatable results, and predictable output for downstream automation.

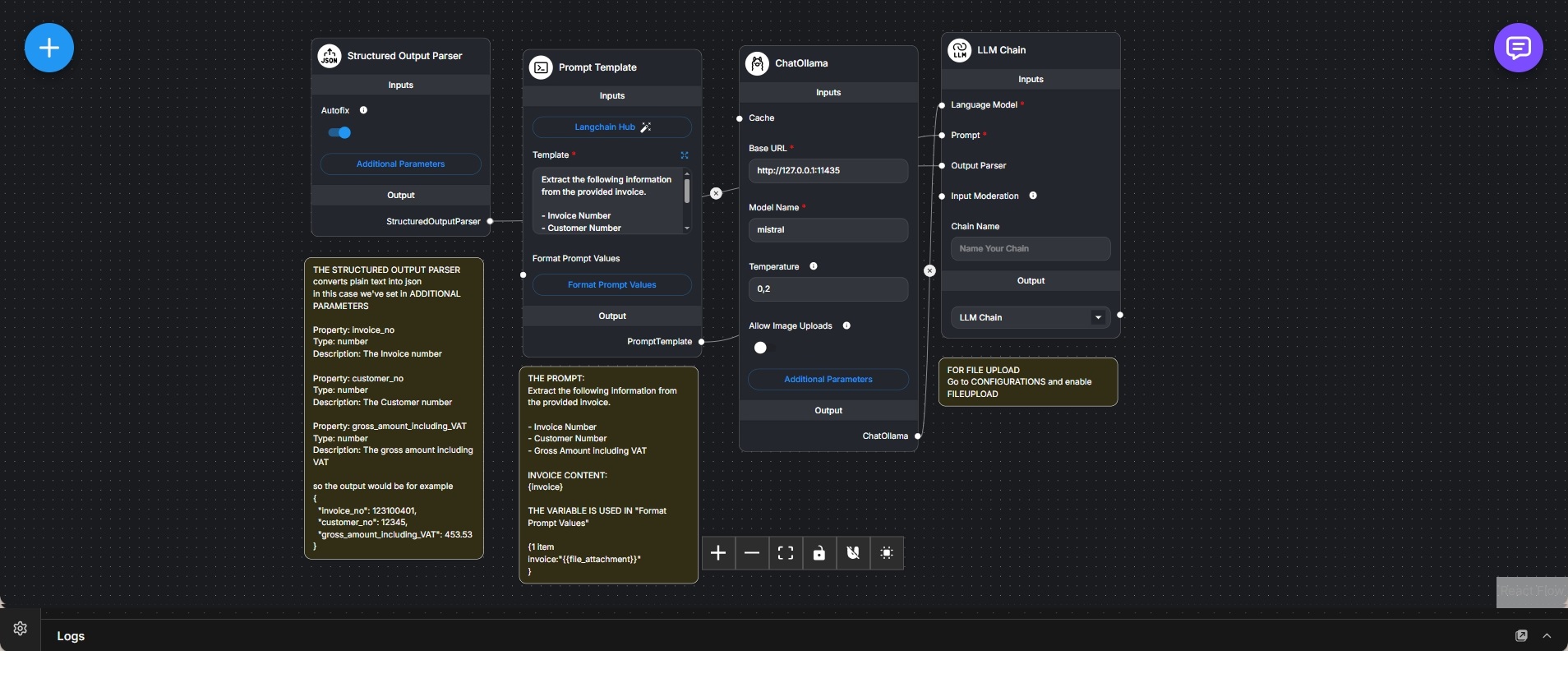

An uploaded invoice document is injected into a prompt template that explicitly instructs the language model to extract predefined fields such as invoice number, customer number, and gross amount including VAT. The prompt is deliberately narrow in scope to minimize ambiguity and reduce hallucinated output.
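A narrow extraction prompt of this kind might look like the following minimal sketch. The field names, their descriptions, the instruction to use `null` for missing values, and the `build_prompt` helper are assumptions made for illustration; the workflow's actual prompt text is not shown here.

```python
from string import Template

# Hypothetical field list: the three fields named above, with assumed descriptions.
FIELDS = {
    "invoice_number": "the invoice number as printed on the document",
    "customer_number": "the customer number assigned by the issuer",
    "gross_amount": "the total amount including VAT, as a number",
}

# The instructions are kept short and closed-ended to leave little room for
# free-form answers.
PROMPT = Template(
    "You are extracting data from an invoice.\n"
    "Return only a JSON object with exactly these keys:\n"
    "$field_list\n"
    "If a value is not present in the document, use null.\n"
    "Do not add explanations or extra keys.\n\n"
    "Invoice text:\n"
    "$invoice_text\n"
)


def build_prompt(invoice_text: str) -> str:
    """Inject the invoice text and the field list into the prompt template."""
    field_list = "\n".join(f"- {name}: {desc}" for name, desc in FIELDS.items())
    return PROMPT.substitute(field_list=field_list, invoice_text=invoice_text)
```

Listing the keys explicitly and forbidding extra ones is one way to keep the scope narrow; the exact wording would need tuning against the model in use.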

A structured output parser defines a fixed schema for the expected response. Each field is typed and documented in advance, allowing the free-form model output to be normalized into a strict JSON structure. Automatic fixing is enabled to handle minor formatting inconsistencies produced by the model.
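The idea of a typed schema plus tolerant parsing can be sketched in plain Python. This is not the workflow's own parser: the `Invoice` dataclass, the field names, and the cleanup rules are assumptions, and the local cleanup below only stands in for the automatic fixing step, which may work differently in the actual workflow (for example by asking the model to repair its own output).

```python
import json
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Invoice:
    """Assumed schema: every field is typed and may be None if absent."""
    invoice_number: Optional[str]
    customer_number: Optional[str]
    gross_amount: Optional[float]


def _extract_json(text: str) -> str:
    """Keep the span from the first '{' to the last '}', dropping code fences or chatter."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    return text[start:end + 1]


def _to_float(value) -> Optional[float]:
    """Accept numbers or simple numeric strings such as '119.00' or '119,00 EUR'."""
    if value is None:
        return None
    if isinstance(value, bool):
        raise ValueError("gross_amount must be numeric")
    if isinstance(value, (int, float)):
        return float(value)
    cleaned = re.sub(r"[^\d,.\-]", "", str(value))
    if "," in cleaned and "." not in cleaned:
        cleaned = cleaned.replace(",", ".")  # decimal comma: "119,00" -> "119.00"
    # Ambiguous inputs such as "1,190.00" are rejected here with ValueError.
    return float(cleaned)


def _to_str(value) -> Optional[str]:
    return None if value is None else str(value).strip()


def parse_invoice(raw: str) -> Invoice:
    """Normalize free-form model output into the fixed Invoice structure."""
    data = json.loads(_extract_json(raw))
    required = ("invoice_number", "customer_number", "gross_amount")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return Invoice(
        invoice_number=_to_str(data["invoice_number"]),
        customer_number=_to_str(data["customer_number"]),
        gross_amount=_to_float(data["gross_amount"]),
    )
```

Failing loudly on missing keys or unparseable amounts, rather than guessing, keeps bad values out of downstream accounting steps.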

The Ollama-hosted language model is invoked through an LLM chain that combines the prompt template, model configuration, and output parser into a single execution step. Model temperature is kept low so that repeated runs on the same invoice yield consistent values rather than creative variation.
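Outside a workflow tool, the model call itself could be sketched against Ollama's local REST API. The model name `llama3.1`, the temperature of `0.0`, the timeout, and the helper names are assumptions; the endpoint shown is Ollama's default local address and would differ if the server is configured otherwise.

```python
import json
import urllib.request

# Default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.1") -> dict:
    """Build the request body; model name and temperature are assumed values."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,                  # return one complete response
        "format": "json",                 # ask Ollama to constrain output to JSON
        "options": {"temperature": 0.0},  # low temperature for consistent output
    }


def generate(prompt: str, model: str = "llama3.1", timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its raw text response."""
    body = json.dumps(build_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload["response"]
```

Calling `generate(...)` requires a running Ollama instance with the chosen model already pulled; the returned string would then be passed to the output parser.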

The final result is a clean, machine-readable object suitable for accounting systems, validation pipelines, or further automation steps. The workflow cleanly separates document input, extraction instructions, model execution, and output normalization.

This setup is well suited for invoice processing and document digitization workflows where data privacy, local execution, and structured output are more important than conversational flexibility.