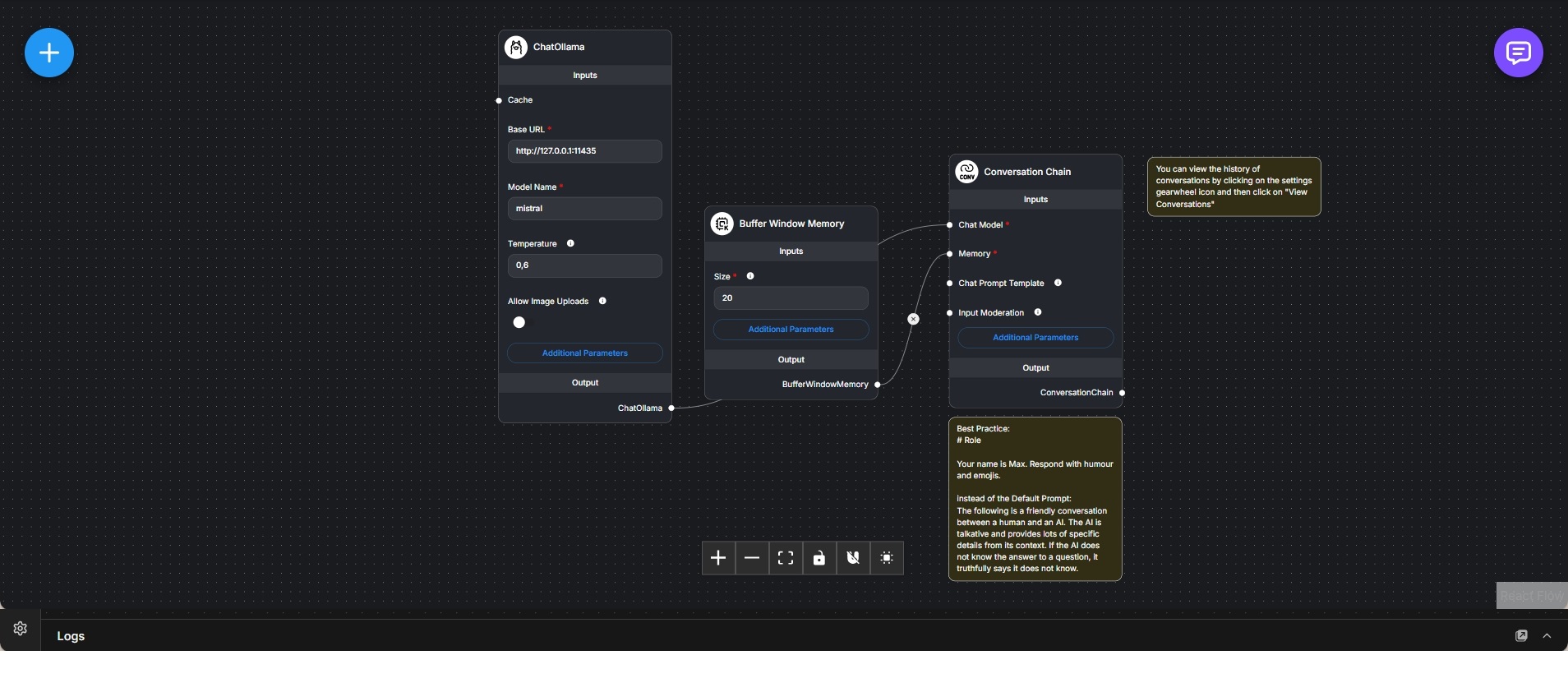

Local Chat Interface with Ollama and Conversation Memory

A local chat workflow that combines an Ollama-hosted language model with window-based memory for multi-turn conversation.

This workflow implements a local, ChatGPT-style conversational setup using an Ollama-hosted language model. A chat model node connects to a locally running Ollama instance and serves as the core language generation component.
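As a rough illustration of what the chat model node does, the sketch below builds and sends a non-streaming request to Ollama's `/api/chat` endpoint using only the Python standard library. The address `http://localhost:11434` is Ollama's usual default, and the model name `llama3` is an assumption for illustration; substitute whatever model is actually pulled on your machine. The helper names `build_chat_request` and `chat` are hypothetical and not part of the workflow itself.

```python
import json
import urllib.request

# Assumptions: Ollama's usual local address and an illustrative model name.
OLLAMA_URL = "http://localhost:11434/api/chat"
MODEL_NAME = "llama3"


def build_chat_request(messages, model=MODEL_NAME, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming chat request for Ollama."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages, model=MODEL_NAME, url=OLLAMA_URL, timeout=120):
    """Send the messages to the local Ollama server and return the reply text."""
    request = build_chat_request(messages, model=model, url=url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With streaming disabled, the reply arrives as one JSON object.
    return body["message"]["content"]
```

With an Ollama server running locally, `chat([{"role": "user", "content": "Say hello."}])` should return the model's reply as a string.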

To support multi-turn conversations, a buffer window memory component retains a fixed number of recent messages and discards older ones once the window is full. This gives the system short-term conversational context without storing long-term history or external state.
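The windowing behavior can be sketched with a bounded `deque`. This is a minimal stand-in for the memory component, not its actual implementation; the class name `WindowMemory` and the default window size are assumptions chosen for illustration.

```python
from collections import deque


class WindowMemory:
    """Keep only the most recent `window_size` chat messages."""

    def __init__(self, window_size=6):
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        # A deque with maxlen silently drops the oldest item when full.
        self._messages = deque(maxlen=window_size)

    def add(self, role, content):
        self._messages.append({"role": role, "content": content})

    def messages(self):
        return list(self._messages)


memory = WindowMemory(window_size=4)
for turn in range(1, 4):
    memory.add("user", f"question {turn}")
    memory.add("assistant", f"answer {turn}")

# Six messages were added, but only the last four are retained.
recent = memory.messages()
```

Here the first exchange ("question 1" and "answer 1") has fallen out of the window, so the model would no longer see it on the next turn.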

The chat model and memory are combined through a conversation chain, which manages prompt construction, message history, and response generation. This chain acts as the central interaction layer, ensuring that each new user message is evaluated in the context of recent exchanges.
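To make the chain's role concrete, here is a small sketch under stated assumptions: the model is any callable that takes a list of message dictionaries and returns a reply string, and `ConversationChain` and `echo_model` are hypothetical names for illustration. A stand-in model is used so the example runs without a server.

```python
from collections import deque


class ConversationChain:
    """Combine a model callable with a bounded message history."""

    def __init__(self, model, system_prompt, window_size=6):
        self._model = model  # callable: list of message dicts -> reply string
        self._system_prompt = system_prompt
        self._history = deque(maxlen=window_size)

    def build_messages(self, user_input):
        # Prompt construction: system prompt, recent history, new user message.
        return (
            [{"role": "system", "content": self._system_prompt}]
            + list(self._history)
            + [{"role": "user", "content": user_input}]
        )

    def ask(self, user_input):
        reply = self._model(self.build_messages(user_input))
        # Record the exchange so the next turn sees it as context.
        self._history.append({"role": "user", "content": user_input})
        self._history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages):
    """Stand-in model that reports how many messages it was given."""
    return f"saw {len(messages)} messages"


chain = ConversationChain(echo_model, "You are a helpful assistant.", window_size=2)
first = chain.ask("Hello")
second = chain.ask("What did I just say?")
third = chain.ask("And before that?")
```

Because the window holds two messages, the third call sees the same number of messages as the second: the oldest exchange has already been dropped. Swapping `echo_model` for a function that calls a local Ollama server would turn this into a working chat loop.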

A prompt template controls prompt behavior and response style, so tone, role, and behavioral constraints can be defined in the workflow independently of the underlying model.
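One way to picture such a template is a system prompt with named placeholders. The sketch below uses Python's `string.Template`; the placeholder names (`role`, `tone`, `constraints`) and the wording of the template are assumptions for illustration, not the workflow's actual prompt.

```python
from string import Template

# Hypothetical template: the fields and phrasing are illustrative only.
SYSTEM_TEMPLATE = Template("You are $role. Respond in a $tone tone. $constraints")


def render_system_prompt(role, tone, constraints=""):
    """Fill in the template; trailing whitespace is removed if no constraints are given."""
    text = SYSTEM_TEMPLATE.substitute(role=role, tone=tone, constraints=constraints)
    return text.strip()


prompt = render_system_prompt(
    "a patient Python tutor",
    "friendly",
    "Keep answers under 100 words.",
)
```

The rendered string would typically be sent as the system message, so changing the template changes the assistant's behavior without touching the model or the memory settings.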

The setup provides a lightweight, fully local conversational agent suitable for experimentation, prompt testing, or as a foundation for more advanced systems that later add retrieval, tools, or orchestration layers.