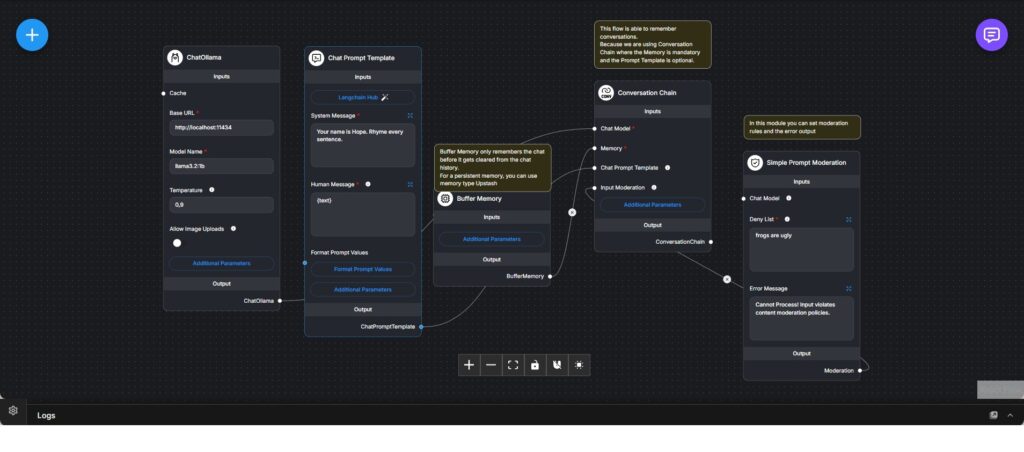

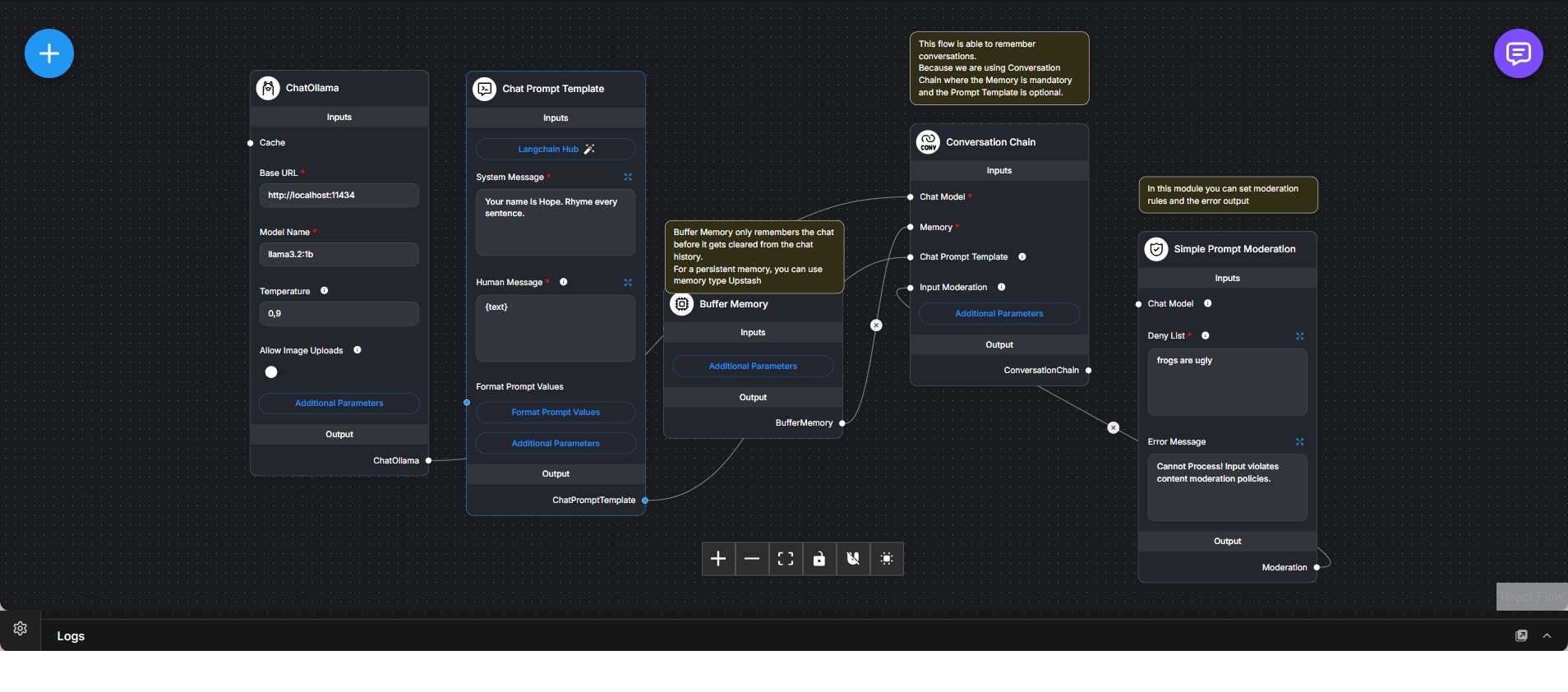

Conversational Chatbot with Memory and Input Moderation

Local conversational workflow that combines an Ollama-based chat model, short-term memory, prompt-controlled behavior, and explicit input moderation.

This workflow implements a controlled conversational chatbot on top of a locally hosted Ollama language model. The chat model is the core generation component and is configured with custom generation parameters that shape the tone and behavior of its responses.
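As a minimal sketch of what "custom parameters" can look like at the API level, the code below builds a non-streaming request to a local Ollama server's `/api/chat` endpoint using only the Python standard library. The default URL (`http://localhost:11434`), the model name `llama3`, and the `temperature`/`top_p` values are assumptions for illustration; the workflow itself may set these through its chat model node rather than through raw HTTP.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change host/port for your setup.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_request(messages, model="llama3", temperature=0.7, top_p=0.9):
    """Build (but do not send) a POST request to Ollama's /api/chat endpoint.

    The model name and sampling values are illustrative assumptions, not the
    workflow's actual configuration.
    """
    payload = {
        "model": model,
        "messages": messages,
        "stream": False,  # ask for one complete JSON reply instead of chunks
        "options": {"temperature": temperature, "top_p": top_p},
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["message"]["content"]
```

Building the request does not contact the server; calling `send(...)` requires Ollama to be running locally with the chosen model already pulled. Lower `temperature` values generally make replies more deterministic, higher values more varied.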

A chat prompt template defines the assistant's system role and behavioral constraints, so the same personality and stylistic rules apply to every interaction. Each user message is inserted into this template before it is passed to the model.
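The template step can be sketched as filling a system message and appending the user's text as the final turn. The persona, tone, and length rule below are hypothetical placeholders, since the workflow's actual system prompt is not given; `string.Template` is used here only as a simple stand-in for a chat prompt template node.

```python
from string import Template

# Hypothetical system prompt; the workflow's real wording is not specified.
SYSTEM_TEMPLATE = Template(
    "You are $persona. Answer in a $tone tone and keep replies under "
    "$max_sentences sentences."
)


def render_prompt(user_input, persona="a helpful assistant", tone="friendly",
                  max_sentences=3):
    """Fill the system template, then inject the user's message as the last turn."""
    system_text = SYSTEM_TEMPLATE.substitute(
        persona=persona, tone=tone, max_sentences=max_sentences
    )
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_input},
    ]
```

Because the system message is rebuilt from the same template on every call, the assistant's personality stays consistent no matter what the user sends.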

To support multi-turn conversations, the workflow includes a buffer-based memory component. It retains only a fixed window of the most recent messages, which gives the model conversational context without persisting history long-term. The conversation chain manages this memory, so each response is generated with awareness of the recent dialogue.
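A window-based buffer memory can be sketched with a bounded `collections.deque`: once the window is full, each new message evicts the oldest one. The class name and the window size `k` (counted in user/assistant exchanges) are assumptions for illustration, not the workflow's exact component.

```python
from collections import deque


class BufferWindowMemory:
    """Keep only the last `k` user/assistant exchanges in process memory.

    History lives only in this object, so it is lost when the process ends
    (short-term memory, no long-term persistence).
    """

    def __init__(self, k=3):
        # Each exchange is two messages, so the deque holds at most 2 * k items;
        # appending beyond that silently drops the oldest message.
        self._messages = deque(maxlen=2 * k)

    def save_exchange(self, user_text, assistant_text):
        self._messages.append({"role": "user", "content": user_text})
        self._messages.append({"role": "assistant", "content": assistant_text})

    def history(self):
        """Return the retained messages, oldest first."""
        return list(self._messages)

    def clear(self):
        self._messages.clear()
```

With `k=2`, saving three exchanges keeps only the second and third; the first is discarded automatically. A small window keeps prompts short, at the cost of forgetting earlier turns.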

An input moderation module enforces content rules before the model is invoked. Each incoming message is checked against a configured deny list; a message that violates the rules is intercepted and answered with a predefined error response instead of reaching the model.
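A deny-list check of this kind can be sketched as a case-insensitive substring match that raises a controlled error. The phrases, the error text, and the substring-matching rule are all assumptions; the workflow's moderation module may match differently.

```python
class ModerationError(Exception):
    """Raised when an input matches an entry on the deny list."""


# Hypothetical deny list and error message; configure these per deployment.
DENY_LIST = ["ignore previous instructions", "system prompt"]
ERROR_MESSAGE = "Sorry, I can't help with that request."


def moderate(user_input, deny_list=DENY_LIST, error_message=ERROR_MESSAGE):
    """Reject the input if it contains any deny-list phrase (case-insensitive).

    Returns the input unchanged when it passes, so it can flow on to the model.
    """
    normalized = user_input.casefold()
    for phrase in deny_list:
        if phrase.casefold() in normalized:
            raise ModerationError(error_message)
    return user_input
```

Plain substring matching is cheap and predictable but easy to evade with rephrasing or misspellings, which is consistent with describing this as basic moderation.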

The conversation chain is the central coordination layer: it ties the chat model, memory handling, prompt logic, and moderation together into a single interaction flow. This design balances conversational flexibility with explicit control over behavior and safety.
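The coordination described above can be sketched as one `run` method that moderates first, then assembles system prompt, recent history, and the new message, calls the model, and finally updates the memory window. This is a self-contained illustration, not the workflow's actual chain: the class, its parameters, and the choice to return the error text (rather than raise) are assumptions, and `model` is any callable, such as a hypothetical wrapper around a local Ollama call.

```python
from collections import deque
from typing import Callable, Dict, List

Message = Dict[str, str]


class ConversationChain:
    """Moderate input, build the prompt, call the model, then update memory."""

    def __init__(self, model: Callable[[List[Message]], str], system_prompt: str,
                 deny_list: List[str], error_message: str, k: int = 3):
        self.model = model
        self.system_prompt = system_prompt
        self.deny_list = [phrase.casefold() for phrase in deny_list]
        self.error_message = error_message
        self.memory: deque = deque(maxlen=2 * k)  # last k exchanges only

    def run(self, user_input: str) -> str:
        # 1. Moderation: blocked input never reaches the model or the memory.
        text = user_input.casefold()
        if any(phrase in text for phrase in self.deny_list):
            return self.error_message
        # 2. Prompt: system instructions, then recent history, then the new message.
        messages: List[Message] = [{"role": "system", "content": self.system_prompt}]
        messages.extend(self.memory)
        messages.append({"role": "user", "content": user_input})
        # 3. Generation.
        reply = self.model(messages)
        # 4. Memory update: the deque drops the oldest exchange when full.
        self.memory.append({"role": "user", "content": user_input})
        self.memory.append({"role": "assistant", "content": reply})
        return reply
```

Passing a stub such as `lambda msgs: f"seen {len(msgs)} messages"` as `model` makes the flow easy to test offline: with `k=1`, every allowed turn after the first sends the system prompt, one prior exchange, and the new message, while a blocked input returns the error text without touching memory.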

The workflow is suitable for local chatbot deployments that require memory, personality definition, and basic moderation without relying on external cloud services.