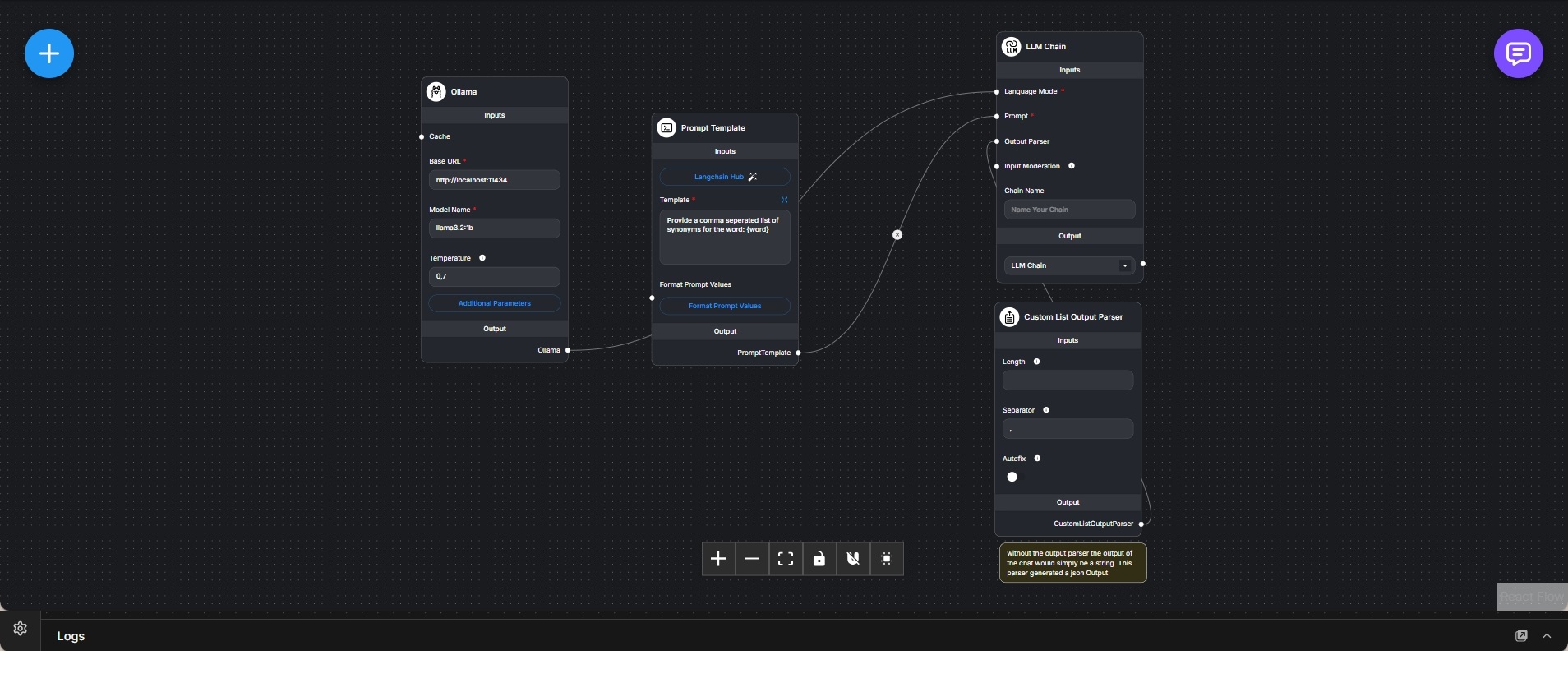

Structured List Generation with Ollama and Custom Output Parser

Local workflow that converts a free-form language model response into a structured list using a custom output parser.

This workflow demonstrates how to transform an unstructured language model response into a predictable, machine-readable format using a custom output parser. It is intentionally narrow in scope and focuses on output control rather than conversation, memory, or agent behavior.

A locally hosted Ollama model serves as the language model backend. The model is prompted via a simple template that instructs it to return a comma-separated list of synonyms for a given input word. The response is still plain text, but the comma-separated format keeps it easy to parse.
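The prompting step can be sketched outside the visual editor as a template plus a request to Ollama's REST API. This is a minimal sketch, not the workflow's actual configuration: the prompt wording, the model name `llama3`, and the helper names are assumptions, and the host is Ollama's default local address.

```python
import json
import urllib.request

# Hypothetical prompt wording; the workflow's real template may differ.
PROMPT_TEMPLATE = (
    "List synonyms for the word '{word}'. "
    "Reply with a comma-separated list only, with no numbering or explanation."
)


def build_prompt(word: str) -> str:
    """Fill the template with the input word."""
    return PROMPT_TEMPLATE.format(word=word)


def build_ollama_request(
    word: str,
    model: str = "llama3",  # assumed model name; use whichever model is pulled locally
    host: str = "http://localhost:11434",  # Ollama's default local address
) -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to /api/generate."""
    payload = {"model": model, "prompt": build_prompt(word), "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(word: str, model: str = "llama3") -> str:
    """Send the request and return the raw text; needs a running Ollama server."""
    with urllib.request.urlopen(build_ollama_request(word, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("happy")` with a local server running would return the model's raw text, which is exactly the unstructured string the rest of the workflow has to deal with.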

The LLM Chain node combines the prompt template and language model into a single execution unit. Without an output parser attached, the chain returns a plain string containing the generated synonyms.
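Conceptually, the chain is just function composition: fill the template, then call the model. The sketch below illustrates that idea with a stand-in model; `make_chain` and `fake_llm` are hypothetical names for illustration and are not part of the workflow tool.

```python
from typing import Callable


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Combine a prompt template and a model callable into one callable."""

    def run(word: str) -> str:
        prompt = template.format(word=word)
        return llm(prompt)  # no parser attached, so the result is raw text

    return run


def fake_llm(prompt: str) -> str:
    """Stand-in for the Ollama model so the sketch runs offline."""
    return "glad, joyful, cheerful"


chain = make_chain(
    "Give synonyms for {word} as a comma-separated list.", fake_llm
)
raw = chain("happy")
print(type(raw).__name__, repr(raw))
```

The point of the sketch is the return type: the caller gets one string and has to split it before anything downstream can treat it as a list.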

A Custom List Output Parser is attached to the chain to normalize the response. This parser splits the model output using a defined separator and optionally enforces a fixed list length. Autofix can be enabled to improve robustness when the model output slightly deviates from the expected format.
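The parser's behavior can be approximated in a few lines. The following is a sketch under assumptions rather than the node's actual implementation: it treats the length setting as a strict check, and it models autofix as "ask for a corrected output and parse again", with the correction step passed in as a hypothetical `fix` callable.

```python
from typing import Callable, List, Optional


class ListParseError(ValueError):
    """Raised when the output does not match the expected list shape."""


def parse_list(
    text: str, separator: str = ",", length: Optional[int] = None
) -> List[str]:
    """Split on the separator, trim whitespace, and drop empty items."""
    items = [part.strip() for part in text.split(separator)]
    items = [item for item in items if item]
    if length is not None and len(items) != length:
        raise ListParseError(f"expected {length} items, got {len(items)}")
    return items


def parse_with_autofix(
    text: str,
    fix: Callable[[str, str], str],  # hypothetical: (bad_output, error) -> new output
    separator: str = ",",
    length: Optional[int] = None,
    max_fixes: int = 2,
) -> List[str]:
    """Parse, and on failure ask `fix` for a corrected output, up to max_fixes times."""
    for _ in range(max_fixes):
        try:
            return parse_list(text, separator, length)
        except ListParseError as err:
            text = fix(text, str(err))
    # Final attempt; raises ListParseError if the output is still malformed.
    return parse_list(text, separator, length)
```

In a real setup, `fix` would send the malformed output and the error message back to the model. Keeping it as a parameter means the parsing logic stays deterministic and testable without a model.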

The final result is a structured list (JSON array) instead of raw text. This makes the output immediately usable for downstream automation, validation, UI rendering, or integration with other systems.
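The difference between the two output shapes is easiest to see side by side. The example below uses a made-up model response and plain string splitting as a stand-in for the parser node.

```python
import json

# Made-up raw response of the kind the model might return.
raw_text = "glad, joyful, cheerful"

# Same normalization idea as the parser: split, trim, drop empties.
items = [part.strip() for part in raw_text.split(",") if part.strip()]

# Serialized form that downstream steps can consume directly.
as_json = json.dumps(items)
print(as_json)  # ["glad", "joyful", "cheerful"]
```

A downstream step can now index, count, or validate `items` without re-parsing text.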

This workflow highlights an important design pattern: use the language model for generation and delegate structure enforcement to deterministic parsing components. The pattern suits tasks such as keyword expansion, taxonomy generation, tag extraction, and controlled content pipelines.