Customer Support Agent with Vector Retrieval and Tool Execution

Retrieval-augmented customer support agent that answers questions using a vectorized knowledge base and controlled tool execution.

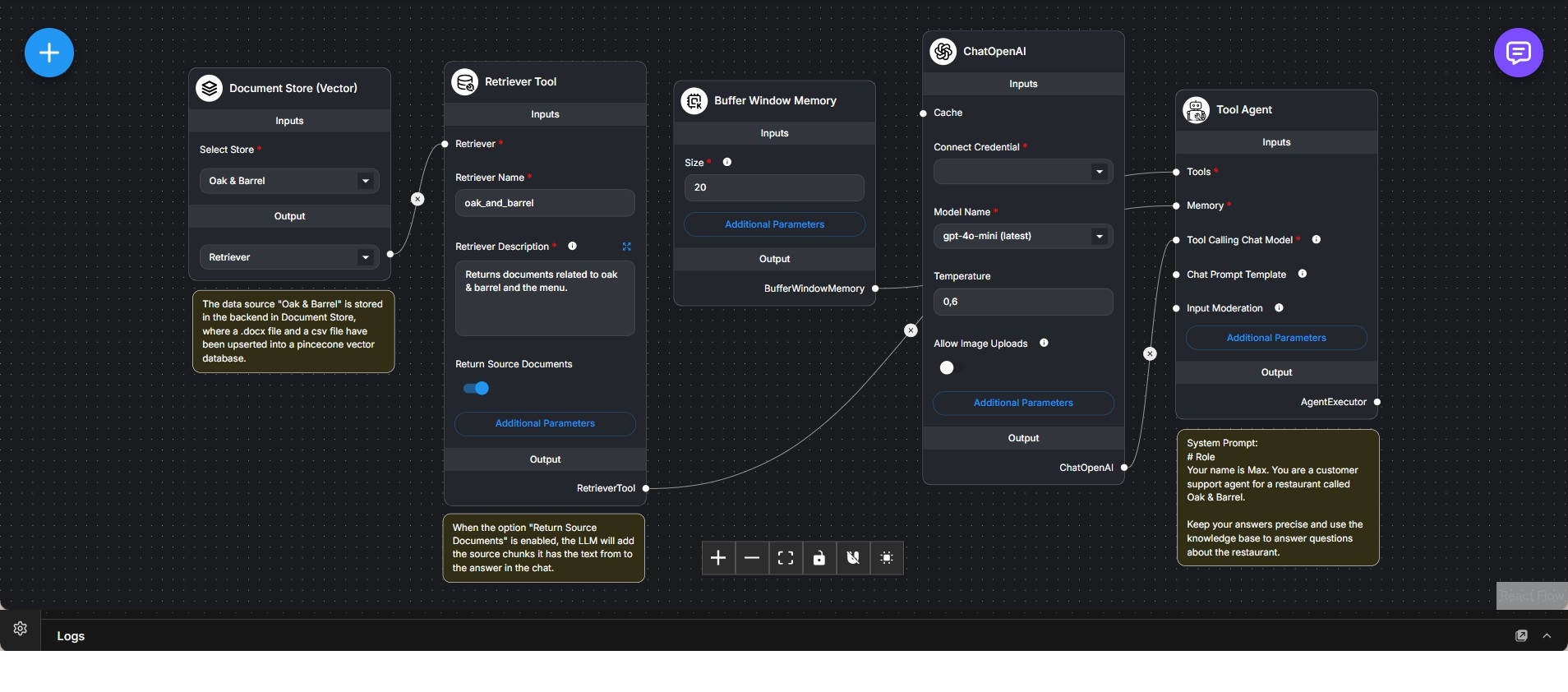

This workflow implements a customer support agent that combines retrieval-augmented generation (RAG) with explicit tool usage. It answers user questions from a predefined knowledge base rather than relying solely on what the model learned during training.

A document store backed by vector embeddings serves as the primary knowledge source. Domain-specific documents are split into chunks, embedded, and indexed. At query time, a retriever tool returns the chunks whose embeddings are most similar to the user’s query. These retrieved chunks provide grounded context for the agent’s responses.
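The retrieval mechanic can be illustrated with a small in-memory sketch. It is not the workflow's actual vector store: `embed` is a hypothetical stand-in that uses bag-of-words token counts instead of a learned embedding model, and `VectorStore`, `Chunk`, and the sample documents are invented for illustration. What it does show is the core idea: each chunk is embedded once at indexing time, and at query time chunks are ranked by cosine similarity to the query embedding and the top `k` are returned.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a real embedding model:
    # a sparse bag-of-words vector of lowercased token counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse vectors (0.0 if either is empty).
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class Chunk:
    doc_id: str
    text: str
    vector: Counter


class VectorStore:
    """In-memory store: embed chunks once, then query by similarity."""

    def __init__(self) -> None:
        self.chunks: list[Chunk] = []

    def add(self, doc_id: str, text: str) -> None:
        # Indexing: compute the embedding once and keep it with the text.
        self.chunks.append(Chunk(doc_id, text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[Chunk]:
        # Retrieval: rank every chunk by similarity to the query, keep the top k.
        q = embed(query)
        ranked = sorted(self.chunks, key=lambda c: cosine(q, c.vector), reverse=True)
        return ranked[:k]


# Hypothetical sample knowledge base.
store = VectorStore()
store.add("refunds", "Refunds are issued within 5 business days of receiving the return.")
store.add("shipping", "Standard shipping takes 3 to 7 business days.")
store.add("hours", "Support is available Monday to Friday, 9am to 5pm.")

for chunk in store.retrieve("How long do refunds take?", k=1):
    print(chunk.doc_id)  # prints "refunds"
```

With a real embedding model the vectors would be dense and capture meaning rather than shared words, so a query phrased differently from the document could still match it; the ranking step stays the same.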

A buffer window memory component keeps only the most recent exchanges of the conversation, so follow-up questions can be interpreted in context without persisting long-term state.
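A buffer window memory can be sketched as a fixed-size queue of recent exchanges. The class name `BufferWindowMemory`, the `window` parameter, and the sample conversation are illustrative assumptions, not the workflow's actual configuration.

```python
from collections import deque


class BufferWindowMemory:
    """Keeps only the most recent `window` user/assistant exchanges.

    Older exchanges are evicted automatically, and everything lives in
    process memory, so nothing is persisted long term.
    """

    def __init__(self, window: int = 3) -> None:
        # Each entry is one (user_message, assistant_reply) pair;
        # maxlen makes the deque drop the oldest pair when full.
        self.turns: deque = deque(maxlen=window)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_messages(self) -> list[dict]:
        # Flatten the window into role/content messages for the chat model.
        messages = []
        for user, assistant in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
        return messages


# Hypothetical conversation.
memory = BufferWindowMemory(window=2)
memory.save("Do you ship to Canada?", "Yes, we ship to Canada.")
memory.save("How long does it take?", "Usually 5 to 10 business days.")
memory.save("What about returns?", "Returns are accepted within 30 days.")

# Only the last two exchanges remain; the first has been evicted.
for m in memory.as_messages():
    print(m["role"], ":", m["content"])
```

The window size is a trade-off: here the opening question has already been evicted, so a later follow-up that depends on it would lose its context, while a larger window sends more tokens with every request.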

The chat model is configured for tool calling and connected to a tool agent, which acts as the orchestration layer. The agent decides when to invoke the retriever tool, runs it, and passes the retrieved source documents back into response generation. When the option to return source documents is enabled, the model can quote or reference the retrieved text to support its answers.
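The orchestration loop can be sketched as follows. A real tool agent delegates the decision to the chat model; here `fake_model` is a hypothetical rule-based stand-in (request the retriever once, then answer from the first returned document), and `retriever_tool` uses keyword overlap instead of vector search. `KNOWLEDGE_BASE`, `TOOLS`, and `run_agent` are likewise invented names. Because each retrieved document carries its `source` alongside its text, the final answer can cite where it came from, which approximates the effect of returning source documents.

```python
import re

# Hypothetical knowledge base standing in for the vector store.
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are issued within 5 business days of receiving the return.",
    "shipping-policy": "Standard shipping takes 3 to 7 business days.",
}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retriever_tool(query: str) -> list[dict]:
    # Stand-in retriever: keyword overlap instead of vector similarity.
    # Each result keeps its source id so the answer can cite it.
    q = tokens(query)
    return [
        {"source": doc_id, "text": text}
        for doc_id, text in KNOWLEDGE_BASE.items()
        if q & tokens(text)
    ]


TOOLS = {"retriever": retriever_tool}


def fake_model(messages: list[dict]) -> dict:
    # Hypothetical tool-calling model: returns a tool call or a final answer.
    last = messages[-1]
    if last["role"] == "user":
        # No retrieved context yet, so request the retriever tool.
        return {"tool_call": {"name": "retriever", "query": last["content"]}}
    docs = last["content"]  # otherwise the last message is a tool result
    if not docs:
        return {"answer": "I could not find that in our documentation."}
    top = docs[0]
    return {"answer": f"{top['text']} (source: {top['source']})"}


def run_agent(question: str, max_steps: int = 3) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if "answer" in reply:
            return reply["answer"]
        call = reply["tool_call"]
        # The agent executes the requested tool and feeds the result back.
        result = TOOLS[call["name"]](call["query"])
        messages.append({"role": "tool", "content": result})
    return "Sorry, I could not complete that request."


print(run_agent("How long do refunds take?"))
```

The `max_steps` cap is a simple safeguard against a model that keeps requesting tools without ever producing an answer.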

A system prompt defines the agent’s role as a customer support representative for a specific business and constrains its tone, scope, and response style. This helps keep answers concise, relevant, and aligned with the domain knowledge stored in the vector database.
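The system prompt is plain configuration text sent ahead of every request. The sketch below shows one way to assemble it; the prompt wording, the `{business}` placeholder, the business name, and the helper `build_messages` are hypothetical, and the message format assumes a common role/content chat convention rather than any specific provider's API.

```python
# Hypothetical system prompt; {business} is filled in per deployment.
SYSTEM_PROMPT_TEMPLATE = (
    "You are a customer support representative for {business}. "
    "Only answer questions about {business}'s products, orders, and policies. "
    "Use the retriever tool to look up information and base your answer on the "
    "retrieved documents. If they do not contain the answer, say so instead of "
    "guessing. Keep replies short, polite, and to the point."
)


def build_messages(business: str, history: list[dict], question: str) -> list[dict]:
    # Order matters: system prompt first, then the windowed history,
    # then the new user question.
    system = {"role": "system", "content": SYSTEM_PROMPT_TEMPLATE.format(business=business)}
    return [system, *history, {"role": "user", "content": question}]


# Hypothetical short-term history, e.g. from a buffer window memory.
history = [
    {"role": "user", "content": "Do you ship to Canada?"},
    {"role": "assistant", "content": "Yes, we ship to Canada."},
]
messages = build_messages("Acme Outdoor Gear", history, "How long does it take?")

for m in messages:
    print(m["role"])  # system, user, assistant, user
```

Scope limits and an explicit "say so instead of guessing" instruction encourage grounded answers, but a prompt alone does not guarantee them; retrieval quality still determines what the model has to work with.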

The workflow cleanly separates knowledge storage, retrieval, memory, and agent reasoning, making it suitable for production-style customer support scenarios where accuracy, grounding, and controlled behavior are required.