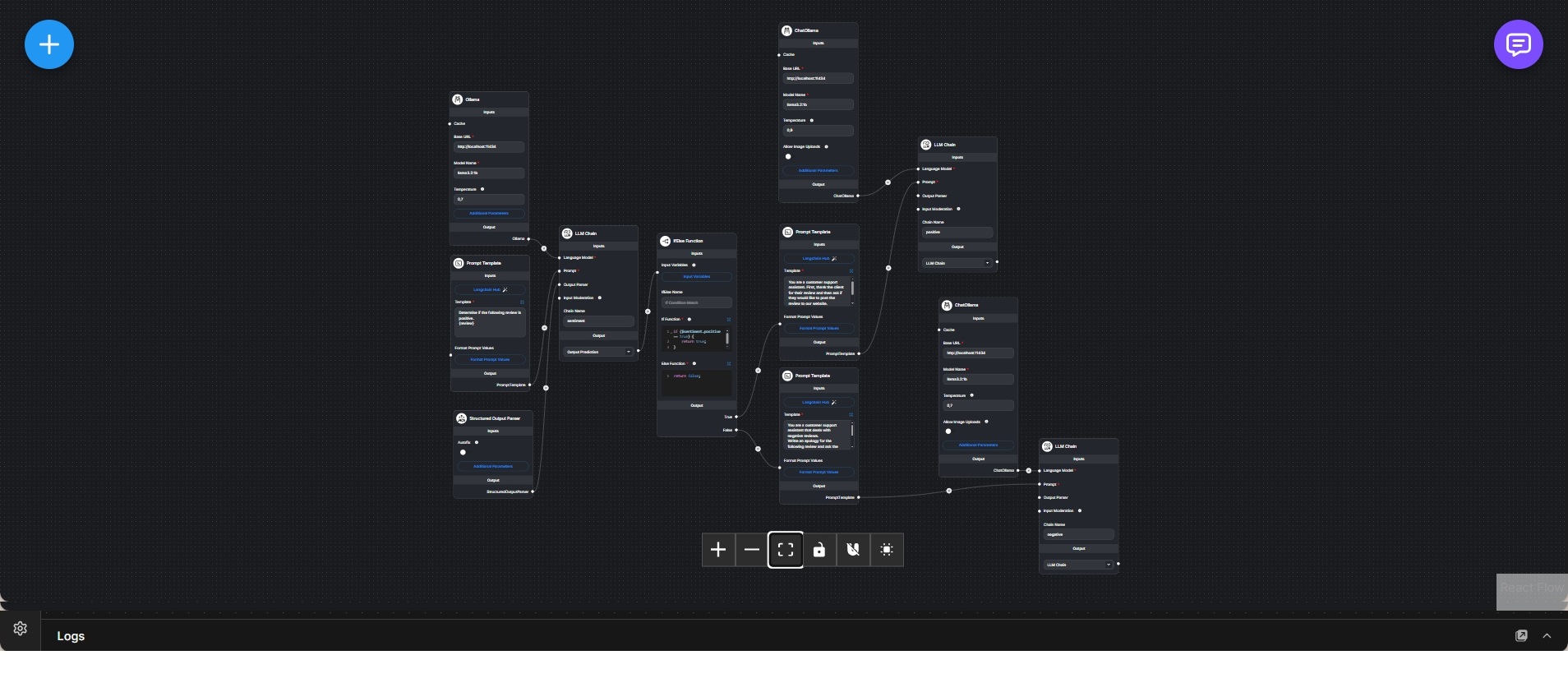

Conditional Response Workflow with Ollama and Structured Output

Multi-branch Flowise workflow that evaluates user input and generates different structured responses based on conditional logic.

This workflow implements a conditional chat pipeline that dynamically changes its behavior based on the content of the user’s input. It is designed to demonstrate controlled branching, structured output parsing, and prompt specialization within a single Flowise graph.

At the core of the workflow is a locally hosted Ollama language model that handles text generation. User input first passes through a prompt template and an LLM chain, which produces an intermediate response. A structured output parser then normalizes that response into a predictable format that can be evaluated programmatically.
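The parser's job can be sketched outside Flowise in plain Python. This is a minimal illustration, not the Flowise Structured Output Parser itself: it assumes a hypothetical schema in which the model is asked to return a JSON object with `category` and `summary` fields, and the names `REQUIRED_FIELDS` and `parse_structured_output` are made up for the example.

```python
import json
import re

# Hypothetical schema: the fields this sketch expects the model to emit.
REQUIRED_FIELDS = {"category": str, "summary": str}


def parse_structured_output(raw: str) -> dict:
    """Extract a JSON object from raw model text and validate it."""
    # Models often wrap JSON in prose or code fences, so take the span
    # from the first '{' to the last '}' (assumes a single object).
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")
    data = json.loads(match.group(0))
    # Reject output that lacks a required field or has the wrong type.
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field!r}")
    # Normalize the routing key so later comparisons are case-insensitive.
    data["category"] = data["category"].strip().lower()
    return data
```

Failing loudly on malformed output, rather than passing it through, is what makes the downstream branch decision predictable: the conditional step only ever sees a dictionary with the expected keys.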

A conditional function node inspects the parsed result and decides which execution path to follow. The workflow then routes the request to one of several prompt template and LLM chain pairs, each tailored to a specific response style, task, or intent category.
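The decision made by the conditional node amounts to a lookup from the parsed category to a branch. The Python sketch below shows only that logic, not the node's actual function body. The category names mirror the behaviors mentioned in this description, while the branch identifiers and the fallback route are assumptions added for illustration.

```python
# Hypothetical mapping from parsed category to branch; the real workflow's
# categories and branch names may differ.
ROUTES = {
    "classification": "classification_chain",
    "explanation": "explanation_chain",
    "task": "task_chain",
}
# Assumed fallback branch for categories the workflow does not recognize.
DEFAULT_ROUTE = "fallback_chain"


def choose_branch(parsed: dict) -> str:
    """Return the name of the branch that should handle this request."""
    category = str(parsed.get("category", "")).strip().lower()
    # Unknown or missing categories go to an explicit fallback instead of failing.
    return ROUTES.get(category, DEFAULT_ROUTE)
```

For example, `choose_branch({"category": "Explanation"})` selects `"explanation_chain"`. Because the mapping is a plain table, adding a new behavior means adding one entry and one branch rather than editing a shared prompt.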

Each branch has its own prompt configuration and language model invocation, which keeps responses appropriate to their context while all branches share the same underlying model infrastructure. This separation lets the workflow handle multiple behaviors, such as classification, explanation, or task-specific responses, without collapsing them into a single prompt.
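One way to picture "separate prompts, shared model" is a set of per-branch templates that all produce a request for the same local model. The sketch below builds (but does not send) a request body for Ollama's `/api/generate` endpoint, which a local Ollama server exposes by default at `http://localhost:11434`. The model tag `llama3`, the template wording, and the helper name `build_ollama_request` are illustrative assumptions, not the workflow's actual configuration.

```python
# Hypothetical model tag; any model pulled into the local Ollama instance would do.
MODEL = "llama3"

# One prompt template per branch (wording is illustrative only).
BRANCH_PROMPTS = {
    "classification": "Classify the following request into one label.\n\nRequest: {input}",
    "explanation": "Explain the following clearly and concisely.\n\nTopic: {input}",
    "task": "Complete the following task step by step.\n\nTask: {input}",
}


def build_ollama_request(branch: str, user_input: str) -> dict:
    """Build a JSON body for Ollama's /api/generate endpoint for one branch."""
    template = BRANCH_PROMPTS[branch]
    return {
        "model": MODEL,  # every branch targets the same local model
        "prompt": template.format(input=user_input),
        "stream": False,  # request one complete response instead of chunks
    }
```

Calling `build_ollama_request("explanation", "How does DNS caching work?")` yields a body that differs from the other branches only in its prompt text, which is the separation described above.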

The overall design emphasizes explicit control over model behavior, making the workflow suitable for scenarios where deterministic routing and predictable output structure are required, rather than free-form conversational responses.