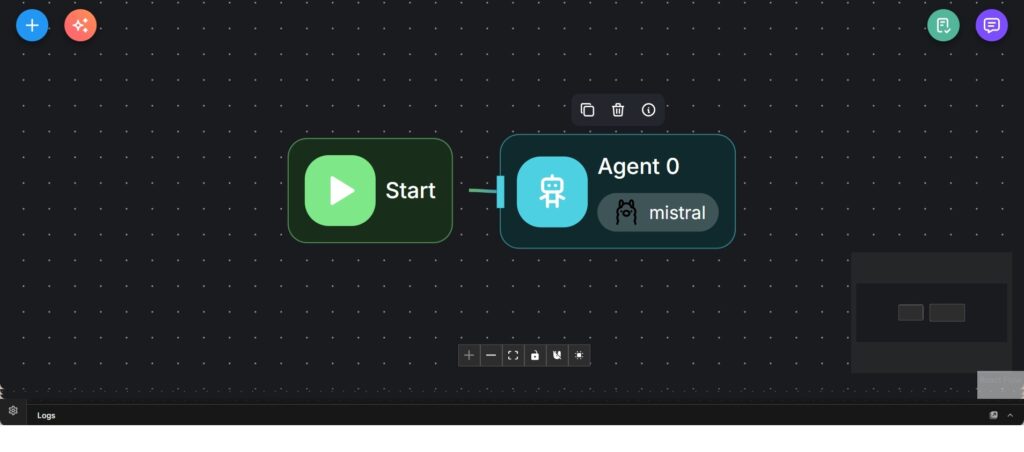

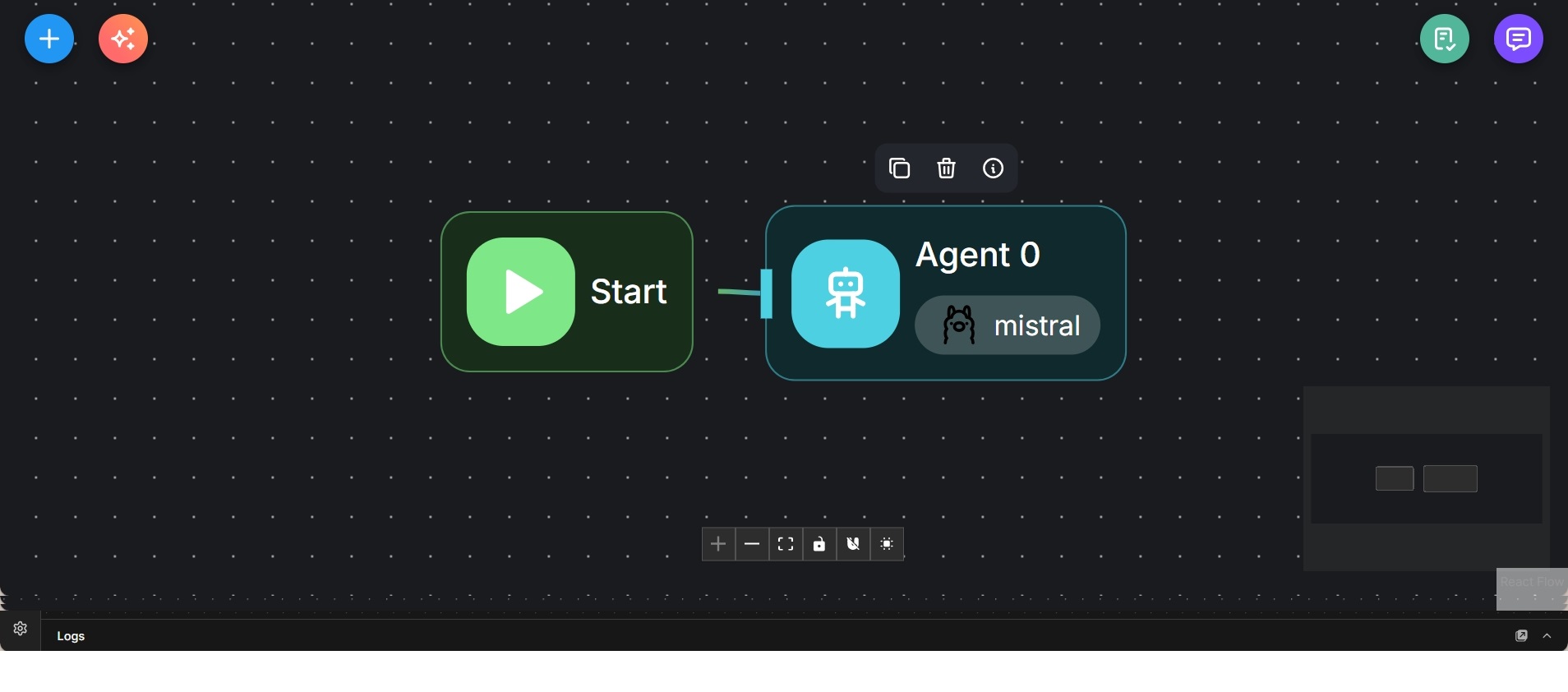

Minimal Local Chat Agent with Mistral Model

Single-agent Flowise workflow that runs a local language model for direct chat-based interaction.

This workflow is a minimal Flowise setup built around a single AI agent. Execution begins as soon as input arrives, and the input is passed directly to the agent with no planning, routing, or iteration layers in between.
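The agent is the only step after the flow starts, so a client can drive the whole workflow with a single request. The sketch below assumes Flowise's REST prediction endpoint (`POST /api/v1/prediction/<chatflow-id>` with a JSON `question` field) on the default port 3000. `CHATFLOW_ID` is a hypothetical placeholder for the ID of your own copy of this workflow, and the helper names are illustrative.

```python
import json
import urllib.request

# Assumptions: Flowise runs locally on its default port 3000, and
# CHATFLOW_ID is a hypothetical placeholder for this workflow's ID.
FLOWISE_URL = "http://localhost:3000"
CHATFLOW_ID = "your-chatflow-id"


def build_prediction_request(question: str,
                             base_url: str = FLOWISE_URL,
                             chatflow_id: str = CHATFLOW_ID) -> urllib.request.Request:
    """Build a POST to the prediction endpoint; the question is the only input."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/v1/prediction/{chatflow_id}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str) -> str:
    """Send the question and return the reply text (needs a running Flowise)."""
    req = build_prediction_request(question)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    # The generated answer is expected in the "text" field of the response.
    return payload.get("text", "")
```

With Flowise running, `ask("Explain what a system prompt is.")` returns the agent's reply. Keeping request construction separate from sending makes it possible to inspect the request without a live server.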

The agent uses a locally hosted Mistral model for language understanding and response generation. There are no external tools, retrieval steps, or sub-agents involved, keeping the interaction strictly model-driven.
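No tools or retrieval sit between the agent and the model, so sending the same prompt straight to the model is a useful cross-check on the workflow's output. The source does not say how Mistral is hosted. This sketch assumes Ollama on its default port (11434) with the model pulled under the tag `mistral`, and uses Ollama's `/api/chat` endpoint with streaming turned off. The function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Mistral is served by Ollama on its default port and was
# pulled under the tag "mistral". Adjust both to match your local setup.
OLLAMA_URL = "http://localhost:11434"
MODEL = "mistral"


def build_chat_request(prompt: str, model: str = MODEL,
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """A single user message with no tools or retrieved context."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # request one complete JSON response, not a stream
    }
    return urllib.request.Request(
        url=f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return response["message"]["content"]


def chat(prompt: str) -> str:
    """Send the prompt to the local model (needs a running Ollama server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))
```

Sampled output varies between runs. Compare several responses before attributing a difference to the agent's configuration rather than to the model.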

The purpose of this workflow is to provide a clean baseline for local LLM interaction. It can be used to test model behavior and validate prompts, or serve as a starting point for more complex agent graphs that add memory, retrieval, or orchestration later.
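For prompt validation, a small harness can run a fixed set of prompts through the workflow and report which replies fail a check. This is a hypothetical sketch. `ask` stands for any function that sends a prompt to the workflow and returns the reply text, for instance a thin wrapper around Flowise's prediction endpoint. `PromptCase`, `run_cases`, and the example cases are illustrative names, not part of Flowise.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PromptCase:
    """A hypothetical test case: a prompt plus a check the reply must pass."""
    name: str
    prompt: str
    check: Callable[[str], bool]


def run_cases(ask: Callable[[str], str], cases: List[PromptCase]) -> List[str]:
    """Send each prompt through `ask`; return names of cases whose reply failed."""
    failures = []
    for case in cases:
        reply = ask(case.prompt)
        if not case.check(reply):
            failures.append(case.name)
    return failures


# Illustrative cases; these expectations are assumptions, not known
# properties of any particular Mistral model.
CASES = [
    PromptCase("non_empty", "Say hello.", lambda r: len(r.strip()) > 0),
    PromptCase("mentions_paris",
               "What is the capital of France? Answer in one word.",
               lambda r: "paris" in r.lower()),
]
```

Sampled model output varies between runs, so checks like these work best when they test loose properties (non-empty, contains a keyword) rather than exact strings.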

The structure emphasizes simplicity and predictability, making it easy to reason about model output without interference from additional workflow components.