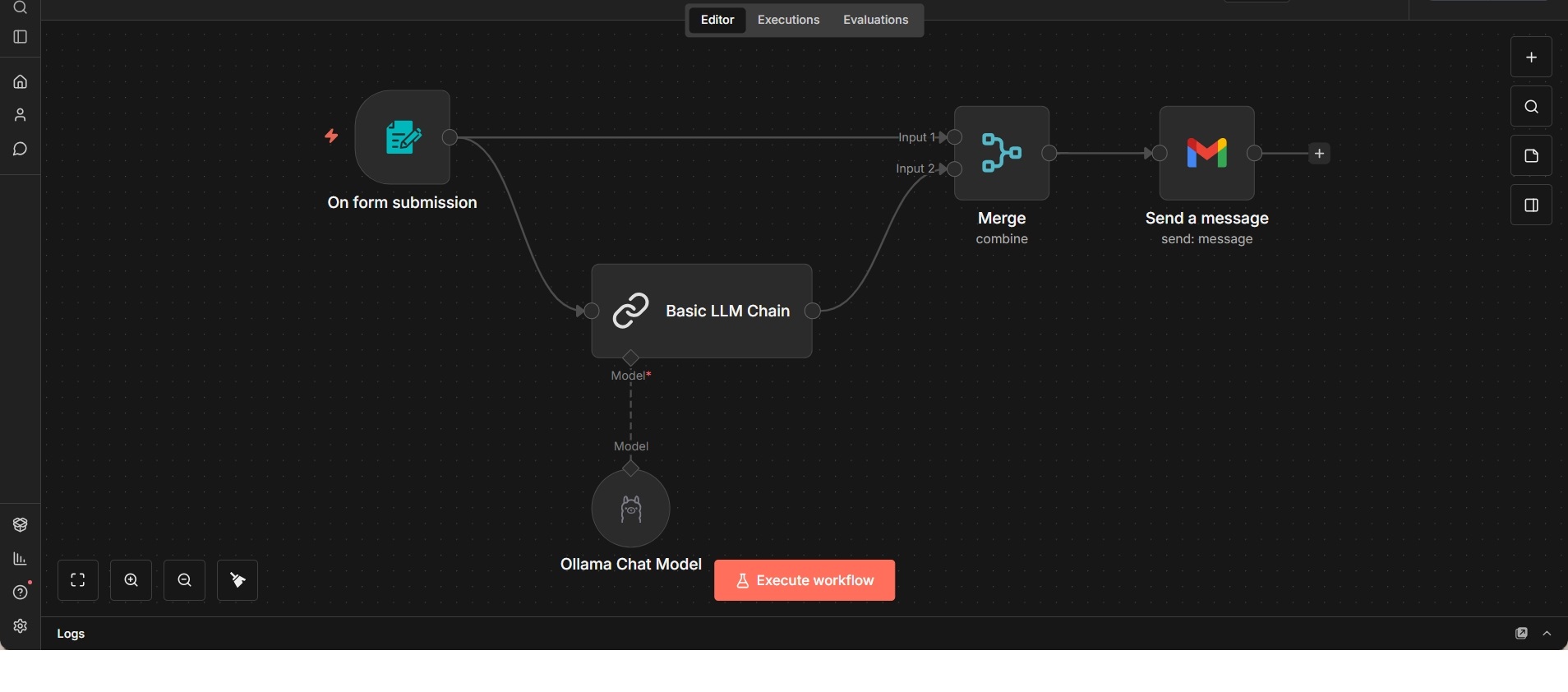

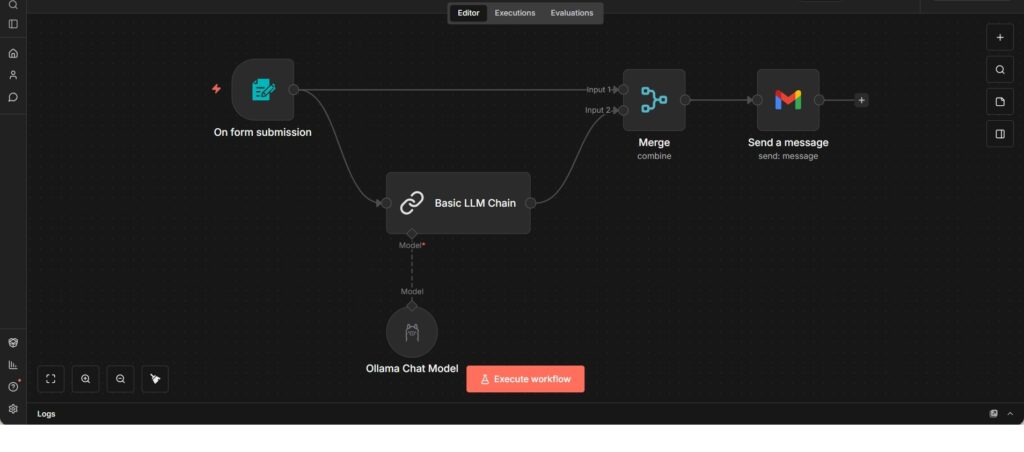

Form-Based LLM Processing with Email Output

Simple workflow that processes form submissions with an LLM and sends the result via email.

This workflow demonstrates an LLM processing pipeline triggered by a form submission. It takes structured user input, passes it through a basic LLM chain, and delivers the resulting output by email.

When a form is submitted, the raw input is forwarded directly to a basic LLM chain backed by a locally hosted Ollama chat model. The model processes the input according to the prompt configured on the chain and returns a text response.
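For readers who want to see this step outside the workflow editor, here is a minimal Python sketch of it, not the workflow's actual implementation. It assumes Ollama is listening on its default local address and uses its `/api/chat` endpoint. The model name `llama3.2`, the `SYSTEM_PROMPT` text, and the helper names `build_chat_request` and `run_chain` are illustrative assumptions rather than values taken from the workflow.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/chat"

# Hypothetical prompt; the workflow's real prompt is whatever is configured on the chain.
SYSTEM_PROMPT = "Summarize the form submission in two sentences."


def build_chat_request(form_data: dict, model: str = "llama3.2") -> dict:
    """Turn raw form fields into a non-streaming Ollama chat request body."""
    # Render every field as a "key: value" line so the model sees the whole submission.
    user_text = "\n".join(f"{key}: {value}" for key, value in form_data.items())
    return {
        "model": model,
        "stream": False,  # ask for one complete JSON response instead of chunks
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
        ],
    }


def run_chain(form_data: dict, model: str = "llama3.2") -> str:
    """Send the form data to the local model and return its text reply."""
    body = json.dumps(build_chat_request(form_data, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.loads(response.read())
    # With streaming disabled, the generated text is under message.content.
    return reply["message"]["content"]
```

Calling `run_chain({"name": "Ada", "message": "Please review my order"})` would return the model's reply as a string, provided the named model has already been pulled into the local Ollama instance.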

The original form data and the model-generated output are then merged into a single payload. This combined result is sent out using an email action, making the workflow suitable for lightweight analysis, feedback generation, classification, or enrichment tasks where results need to be delivered asynchronously.
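The merge and email steps can be sketched in Python as follows. This is an illustration under stated assumptions, not the workflow's own node configuration: the `llm_output` field name, the subject line, the SMTP host and port, and the helper names `merge_payload`, `build_email`, and `send_email` are all hypothetical.

```python
import smtplib
from email.message import EmailMessage


def merge_payload(form_data: dict, llm_output: str) -> dict:
    """Combine the original form fields with the model's reply in one dict."""
    # Note: a form field that is itself named "llm_output" would be overwritten.
    return {**form_data, "llm_output": llm_output}


def build_email(payload: dict, sender: str, recipient: str) -> EmailMessage:
    """Render the merged payload as a plain-text email."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = "Form submission result"  # hypothetical subject line
    message.set_content(
        "\n".join(f"{key}: {value}" for key, value in payload.items())
    )
    return message


def send_email(message: EmailMessage, host: str = "localhost", port: int = 25) -> None:
    """Hand the message to an SMTP server (host and port are assumptions)."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(message)
```

Keeping the merge and the message construction separate from the send step means the payload can be inspected or logged before anything leaves the machine.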

The workflow intentionally avoids additional orchestration or persistence layers. Its purpose is to showcase a minimal, end-to-end LLM integration that connects user input, model processing, and external communication in a clear and reproducible way.
